Boost your knowledge with GlassFlow

Stay informed about new features, explore use cases, and learn how to build real-time data pipelines with GlassFlow.

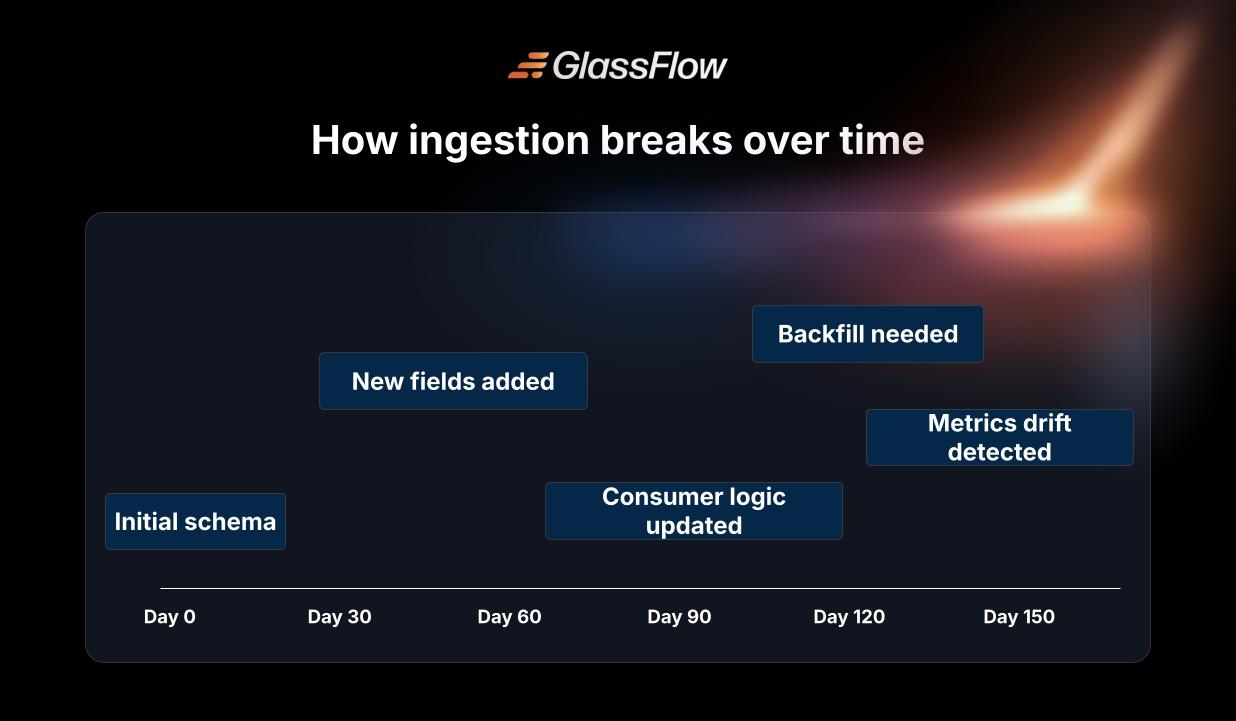

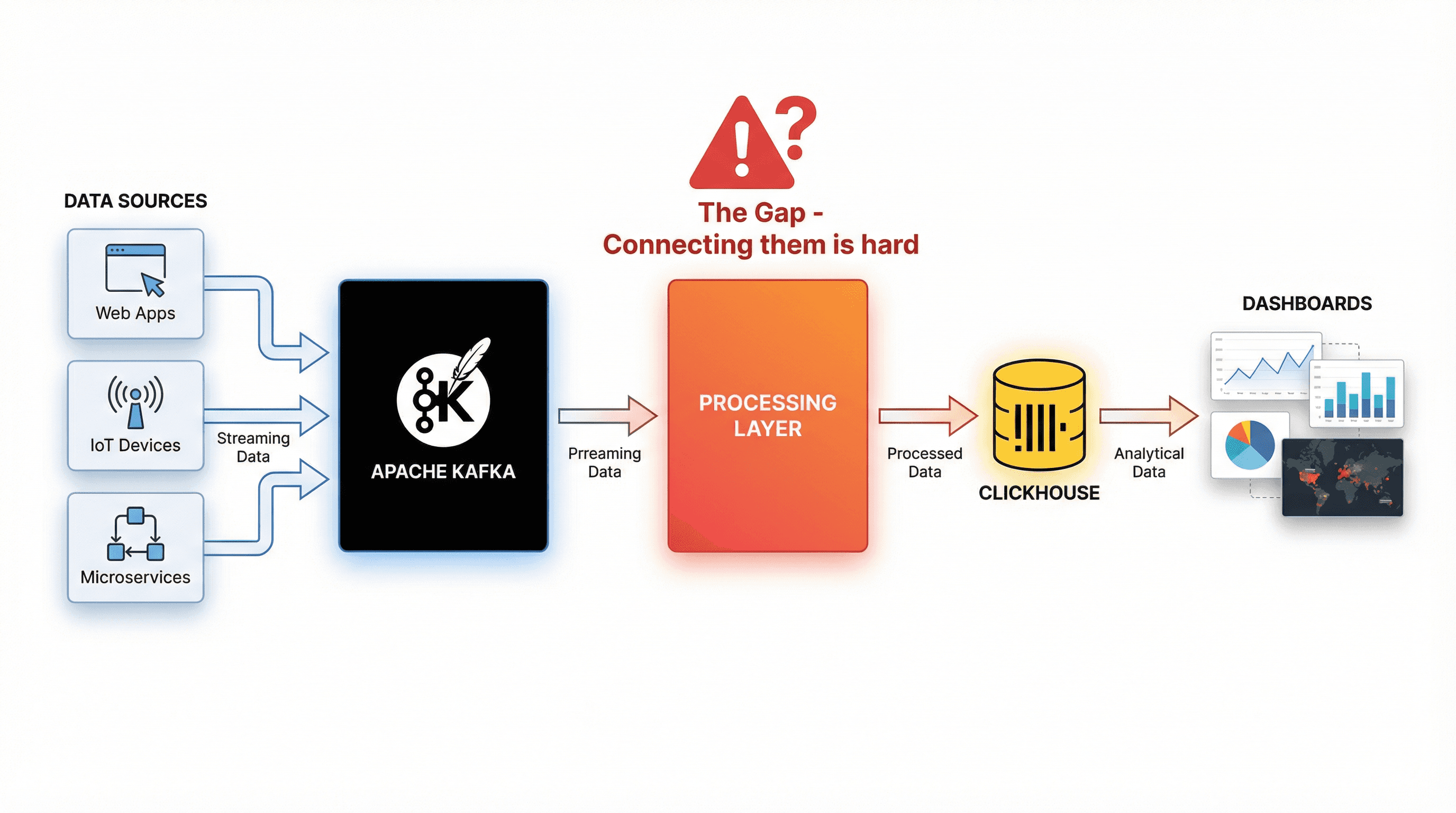

Transformed Kafka data for ClickHouse

Get query-ready data, lower ClickHouse load, and reliable pipelines at enterprise scale.