Delivers query-ready data, lower ClickHouse load, and reliable pipelines at enterprise scale.

Dedupe

Fast joins

Stateless transformations

Late event handling

Reduces load on ClickHouse

Low maintenance effort

Dead-letter-queue

Pipeline Observability

Open source

Enterprise Support

Deployment Service

CH Kafka Table Engine

Needs RMT

ClickPipes for Kafka

Needs RMT

Self-Built Go Service

Needs Custom Code

Needs Custom Code

Needs Custom Code

Needs Custom Code

Vector.dev

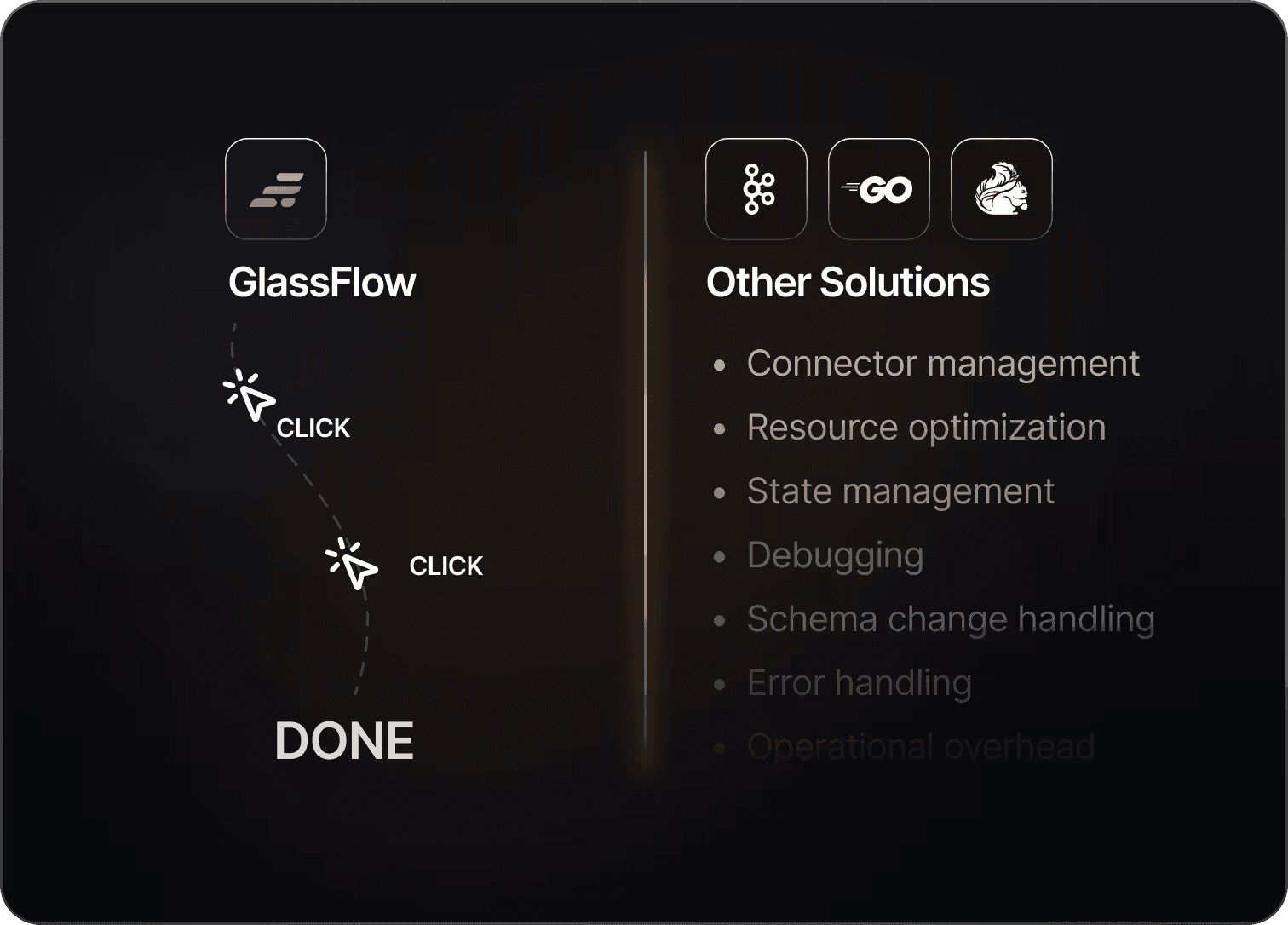

Deduplication with one click.

Select the columns to use as primary keys and get fully managed processing, with no need to tune memory or state management.

7-day deduplication checks.

Automatic detection of duplicates within a 7-day window after setup, so your data stays clean and storage is not exhausted.
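
To make the idea of key-based deduplication inside a time window concrete, here is a minimal Python sketch. It is not GlassFlow's implementation or API: the class name `WindowedDeduplicator`, its parameters, and the in-memory dictionary are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class WindowedDeduplicator:
    """Hypothetical helper: drops events whose key was seen within the window."""

    key_fields: tuple
    window_seconds: float = 7 * 24 * 3600  # 7-day window, matching the text above
    _seen: dict = field(default_factory=dict)  # key -> time of first sighting

    def _evict(self, now: float) -> None:
        # Forget keys older than the window so state does not grow without bound.
        # A linear scan keeps the sketch short; it is not tuned for large state.
        expired = [k for k, ts in self._seen.items() if now - ts >= self.window_seconds]
        for k in expired:
            del self._seen[k]

    def accept(self, event: dict, now: float) -> bool:
        """Return True if the event is new, False if it is a duplicate."""
        self._evict(now)
        key = tuple(event[f] for f in self.key_fields)
        if key in self._seen:
            return False
        self._seen[key] = now
        return True
```

In this sketch, "selecting the primary key columns" corresponds to passing `key_fields`, for example `WindowedDeduplicator(key_fields=("event_id",))`. Only events for which `accept` returns True would be forwarded to ClickHouse.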

Batch ingestion built for ClickHouse.

Choose an ingestion mode such as auto, size-based, or time-window-based.
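
The difference between size-based and time-window-based batching can be sketched as follows. This is an illustrative Python example under assumed names (`Batcher`, `max_rows`, `max_wait_seconds`), not GlassFlow's configuration or code. It flushes a batch when either limit is reached, whichever comes first.

```python
class Batcher:
    """Hypothetical batcher: flushes on a row limit or a time limit."""

    def __init__(self, max_rows: int, max_wait_seconds: float):
        self.max_rows = max_rows
        self.max_wait_seconds = max_wait_seconds
        self._rows = []
        self._opened_at = None  # arrival time of the first row in the current batch

    def add(self, row: dict, now: float):
        """Add a row; return a finished batch when a limit is hit, else None."""
        if not self._rows:
            self._opened_at = now
        self._rows.append(row)
        too_big = len(self._rows) >= self.max_rows
        too_old = now - self._opened_at >= self.max_wait_seconds
        if too_big or too_old:
            return self.flush()
        return None

    def flush(self):
        """Return the buffered rows and start a new, empty batch."""
        batch, self._rows, self._opened_at = self._rows, [], None
        return batch
```

The time limit here is only checked when a row arrives. A production batcher would more likely also flush from a timer, so that a quiet stream does not hold rows back indefinitely.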

Joins, simplified.

Define the fields of the streams you want to join, and GlassFlow handles execution and state management automatically.

Stateful Processing.

A built-in lightweight state store enables low-latency, in-memory deduplication and joins, retaining context within the selected time window.
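
As a rough illustration of a stream join backed by in-memory state and a time window, consider the Python sketch below. The class `WindowedJoin`, the `"left"`/`"right"` side labels, and the merge behavior are assumptions for this example and do not describe GlassFlow's internals.

```python
from collections import defaultdict


class WindowedJoin:
    """Hypothetical two-stream join on one key within a time window."""

    def __init__(self, key: str, window_seconds: float):
        self.key = key
        self.window_seconds = window_seconds
        # Per-side buffers: join key -> list of (arrival time, event)
        self._buffers = {"left": defaultdict(list), "right": defaultdict(list)}

    def _prune(self, side: str, k, now: float) -> None:
        # Drop buffered events for this key that fell out of the window.
        # Only the looked-up key is pruned, which keeps the sketch simple.
        buf = self._buffers[side]
        buf[k] = [(ts, e) for ts, e in buf[k] if now - ts <= self.window_seconds]
        if not buf[k]:
            del buf[k]

    def on_event(self, side: str, event: dict, now: float) -> list:
        """Buffer the event and return joined rows for matches on the other side."""
        other = "right" if side == "left" else "left"
        k = event[self.key]
        self._prune(other, k, now)
        matches = self._buffers[other].get(k, [])
        self._buffers[side][k].append((now, event))
        # Merge as left fields first, then right fields, regardless of arrival order.
        if side == "left":
            return [{**event, **m} for _, m in matches]
        return [{**m, **event} for _, m in matches]
```

For instance, an order arriving on the left stream and a matching user record arriving on the right stream 30 seconds later would be emitted as one combined row, provided the window is at least 30 seconds.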

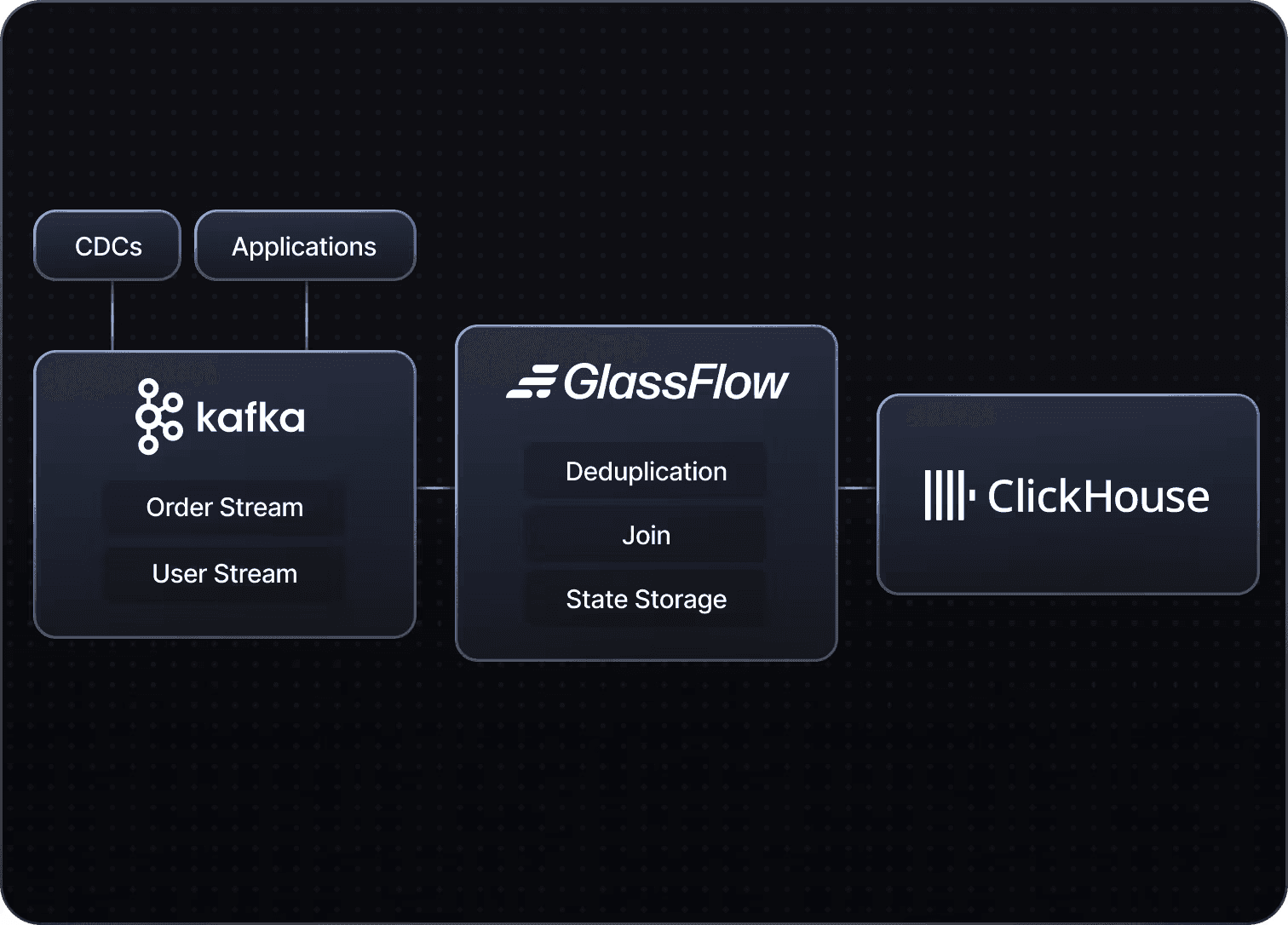

Managed Kafka and ClickHouse Connector.

Built and updated by the GlassFlow team. Supports data inserts with a declared schema as well as schemaless inserts.

Auto Scaling of Workers.

Our Kafka connector starts new workers based on the number of partitions and keeps execution efficient.
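
One common way to scale consumers with partitions is to cap the worker count at the partition count and spread partitions evenly. The Python sketch below shows that general idea only. The function `assign_partitions` and the `max_workers` cap are hypothetical, and the actual scaling policy of the GlassFlow connector is not documented here.

```python
def assign_partitions(num_partitions: int, max_workers: int) -> dict:
    """Hypothetical round-robin mapping of worker index -> list of partition ids."""
    if num_partitions < 0 or max_workers < 1:
        raise ValueError("num_partitions must be >= 0 and max_workers >= 1")
    # More workers than partitions would sit idle, so cap at the partition count.
    workers = min(num_partitions, max_workers)
    assignment = {w: [] for w in range(workers)}
    for p in range(num_partitions):
        assignment[p % workers].append(p)
    return assignment
```

With 5 partitions and a cap of 2 workers, this sketch assigns partitions 0, 2, and 4 to the first worker and 1 and 3 to the second. With 3 partitions and a cap of 8, it starts only 3 workers.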

KAFKA TO CLICKHOUSE: A PRACTICAL GUIDE

This ebook covers everything you need to know about building Kafka → ClickHouse pipelines.

A serverless, production-ready setup for building and transforming event-driven data pipelines, with support for APIs and Webhooks.

Simple Pipeline

With GlassFlow, you remove the workarounds and hacks that would otherwise mean countless hours of setup, unpredictable maintenance, and debugging nightmares. With managed connectors and a serverless engine, GlassFlow offers a clean, low-maintenance architecture that is easy to deploy and scales effortlessly.

Accurate Data Without Effort

You will go from 0 to a full setup in no time! You get connectors that retry data blocks automatically, stateful storage, and built-in handling of late-arriving events. This ensures that the data ingested into ClickHouse is clean and immediately correct.

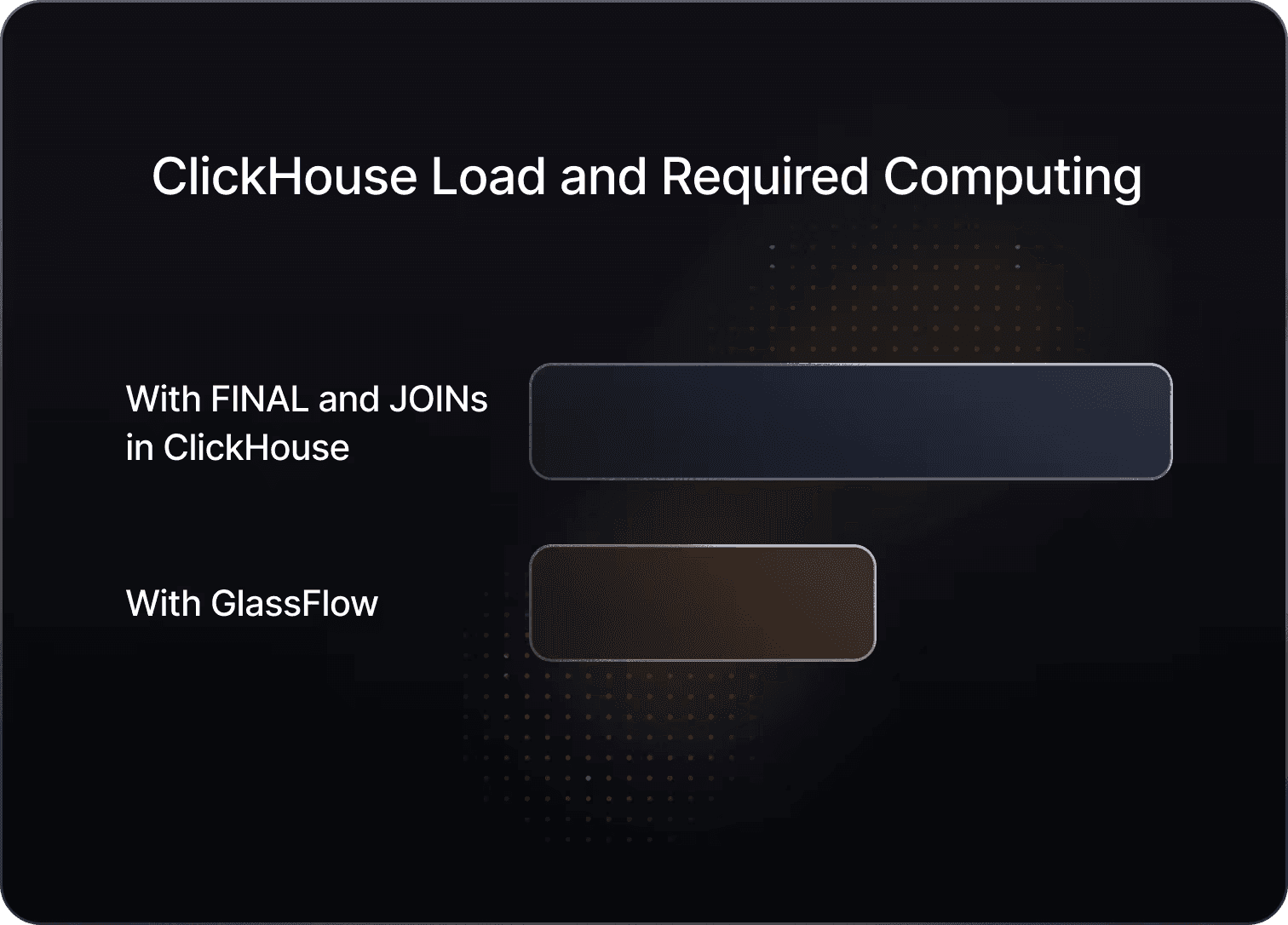

Less load for ClickHouse

Because GlassFlow removes duplicates and executes joins before the data reaches ClickHouse, it reduces the need for expensive operations like FINAL or JOINs within ClickHouse. This lowers storage costs, improves query performance, and ensures ClickHouse only handles clean, optimized data instead of redundant or unprocessed streams.

Feel free to contact us if you have any questions after reviewing our FAQs.